Welcome to the second part of our blog series on gRPC using Python. In our previous blog, we talked about the basics of gRPC, its architecture, and its various components. In this blog, we will go through how to write a gRPC client and server in Python.

As a quick recap, gRPC is an open-source, high-performance, and language-agnostic remote procedure call (RPC) framework. It uses Protocol Buffers as the default serialization mechanism, making it more efficient and scalable compared to traditional RPC mechanisms. In this blog, we will dive deeper into the practical implementation of gRPC using Python. We'll cover topics such as setting up, implementing Unary and Streaming RPCs, and how to implement a client and server using gRPC in Python. So, let's get started!

Note: The code in this blog can be found at: https://github.com/radioactive11/grpc-blog

Setup 🔧

For this blog, I am assuming that you already have Python set up. First, you need to install the grpcio-tools package. This package includes the protocol buffer compiler (protoc) plugin for generating gRPC code in Python.

$ pip install grpcio-tools
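If you want to double-check the installation before moving on, here is a small sketch that only uses the standard library. It assumes the two importable module names are grpc (pulled in as a dependency) and grpc_tools; the helper name is_installed is just something I made up for this example.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be found without importing it."""
    return importlib.util.find_spec(module_name) is not None


# grpc_tools comes from grpcio-tools; grpc comes from its grpcio dependency.
for name in ("grpc", "grpc_tools"):
    status = "found" if is_installed(name) else "missing"
    print(f"{name}: {status}")
```

If either module shows up as missing, re-running the pip command inside the right virtual environment is usually the first thing to try.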

Defining the Service 🗂️

Next, we write the .proto file defining the gRPC service, including the messages and methods that the client and server will use to communicate. This file uses the Protocol Buffers syntax and contains a set of rules for defining the messages, service endpoints, and RPC methods that the service will use.

For this example setup, we will use all 4 kinds of communication between the server and the client:

Unary RPC: This is the simplest form of RPC where the client sends a single request to the server and gets a single response back.

Server streaming RPC: In this type of RPC, the client sends a request to the server and gets a stream of responses in return. The server sends a sequence of messages to the client and the client reads them one by one until the server finishes sending them.

Client streaming RPC: This is the opposite of server streaming RPC. The client sends a stream of requests to the server and gets a single response back.

Bidirectional streaming RPC: In this type of RPC, both the client and the server send a stream of messages to each other. The client sends a sequence of messages to the server and the server sends a sequence of messages back to the client. Both the client and server can read and write messages simultaneously, making it a bidirectional stream.
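Before we touch any gRPC code, it may help to see the four shapes as plain Python. This is only an analogy with no networking involved: a single value stands for a single message and a generator or iterator stands for a stream. All function names and strings here are made up for illustration.

```python
from typing import Iterable, Iterator


def unary(request: str) -> str:
    """One request in, one response out."""
    return f"pong: {request}"


def server_streaming(request: str) -> Iterator[str]:
    """One request in, a stream of responses out."""
    for i in range(3):
        yield f"pong {i}: {request}"


def client_streaming(requests: Iterable[str]) -> str:
    """A stream of requests in, one response out."""
    count = sum(1 for _ in requests)
    return f"received {count} pings"


def bidirectional(requests: Iterable[str]) -> Iterator[str]:
    """A stream of requests in, a stream of responses out."""
    for request in requests:
        yield f"pong: {request}"
```

Calling list(server_streaming("hello")) or client_streaming(iter(["a", "b", "c"])) shows the difference in shape. The real handlers we write later follow the same pattern: streaming handlers either iterate over incoming requests, yield outgoing responses, or both.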

Here is the .proto file we will be using in this example project.

// Syntax Version
syntax = "proto3";

// Definition of a message
message ping {
    string client_message = 1;
}

// Definition of another message
message pong {
    string server_message = 1;
}

// Definition of service
service PingPongService {
    rpc unary_ping(ping) returns (pong) {};
    rpc streaming_ping(stream ping) returns (pong) {};
    rpc streaming_pong(ping) returns (stream pong) {};
    rpc streaming_ping_pong(stream ping) returns (stream pong) {};
}

First, we define the syntax version we are going to use. The syntax declaration specifies the version of the Protocol Buffers language that the .proto file is using. The current version is "proto3".

Next, we define the message schema. The message can contain multiple fields, which are defined using a field number, a data type, and a name. In our example, we only have one field per message type. Here is an example of a message definition with multiple fields per message:

message Person {
    int32 id = 1;
    string name = 2;
    int32 age = 3;
}

Next, we define the services. You can think of these as functions that the client machine can call but that will be executed on the server machine. Here is an example of a server-side streaming RPC that takes a request message of the type MyRequest and returns a stream of responses of type MyResponse:

rpc MyServerStreamingMethod(MyRequest) returns (stream MyResponse) {}

And that's it! We now know how to write a basic .proto file with message and service definitions. Now we can move on to the next step.
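To see why every field needs a number, here is a rough sketch of how a few Person fields would look on the wire. It hand-rolls the Protocol Buffers encoding for two wire types only (varint and length-delimited) and is not how you would serialize messages in practice, since the generated code does that for you. The function names are my own, and the sketch skips negative integers.

```python
def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a Protocol Buffers varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F          # lowest 7 bits
        value >>= 7
        if value:
            out.append(byte | 0x80)  # set the continuation bit
        else:
            out.append(byte)
            return bytes(out)


def encode_tag(field_number: int, wire_type: int) -> bytes:
    """The tag combines the field number with the wire type."""
    return encode_varint((field_number << 3) | wire_type)


def encode_int_field(field_number: int, value: int) -> bytes:
    """Wire type 0: varint (used by int32 and friends)."""
    return encode_tag(field_number, 0) + encode_varint(value)


def encode_string_field(field_number: int, text: str) -> bytes:
    """Wire type 2: length-delimited (used by string)."""
    payload = text.encode("utf-8")
    return encode_tag(field_number, 2) + encode_varint(len(payload)) + payload


# Person(id=7, name="Ada", age=36) with the field numbers from the example above
person_bytes = (
    encode_int_field(1, 7)
    + encode_string_field(2, "Ada")
    + encode_int_field(3, 36)
)
print(person_bytes.hex())
```

Notice that the field names never appear in the bytes, only the numbers. That is why renaming a field is safe but changing its number is not.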

Generate Stubs 🏗️

Now, we will use the protoc compiler to generate Python code from the .proto file. This will generate stubs that we can use to implement the service.

python3 -m grpc_tools.protoc -I ./proto --python_out=. --grpc_python_out=. ./proto/schema.proto

Here is what this command does:

-I ./proto: This flag specifies the directory where the .proto file is located.

--python_out=.: This flag specifies the directory where the generated Python code for the message types will be stored. In this case, it's the current directory (.).

--grpc_python_out=.: This flag specifies the directory where the generated Python code for the gRPC service will be stored. Again, it's the current directory (.).

./proto/schema.proto: This is the path to the .proto file that you want to compile.

This will generate two Python files in the current directory: schema_pb2_grpc.py and schema_pb2.py.
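If you would rather regenerate the stubs from a script than retype the command, something like the sketch below should work. It simply rebuilds the same command line and hands it to subprocess; the function names build_protoc_command and generate_stubs are my own, and the default paths assume the ./proto/schema.proto layout used in this blog.

```python
import subprocess
import sys
from typing import List


def build_protoc_command(proto_dir: str, proto_file: str, out_dir: str = ".") -> List[str]:
    """Build the same command as above, using the current Python interpreter."""
    return [
        sys.executable, "-m", "grpc_tools.protoc",
        "-I", proto_dir,
        f"--python_out={out_dir}",
        f"--grpc_python_out={out_dir}",
        proto_file,
    ]


def generate_stubs(proto_dir: str = "./proto",
                   proto_file: str = "./proto/schema.proto",
                   out_dir: str = ".") -> None:
    """Run the compiler; raises CalledProcessError if protoc reports an error."""
    subprocess.run(build_protoc_command(proto_dir, proto_file, out_dir), check=True)
```

Calling generate_stubs() from the project root should produce the same two files as the command above, provided grpcio-tools is installed in the interpreter that runs the script.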

Creating the Server 🌐

Creating the server mainly consists of two parts:

Implementing the functions which we defined in the service definition that "actually" perform the task.

Running a gRPC server to listen for requests from clients and return responses.

We'll start by doing the easiest part of this blog, creating a new file: server.py and importing the libraries.

import threading
import time
from concurrent import futures

import grpc
from loguru import logger

import schema_pb2
import schema_pb2_grpc

Next, we define a class SchemaService which inherits from schema_pb2_grpc.PingPongServiceServicer.

class SchemaService(schema_pb2_grpc.PingPongServiceServicer):
    pass

Inside this class, we will define the 4 functions which we defined in the .proto file.

Unary

The client sends a request to this method. The method extracts the client_message from the request. Then it creates a new pong message whose server_message field echoes that text and returns it as a response to the client.

    def unary_ping(self, request, context):
        message = request.client_message
        logger.info(f"Client said: {message}")
        response_obj = schema_pb2.pong(server_message=message)
        return response_obj

Client Stream

The client sends a stream of requests, which is a continuous sequence of messages (in this case, lyrics of a song). Once all the messages have been received, the server responds with a schema_pb2.pong message that contains the string "Nice song!" as the value of its server_message field.

    def streaming_ping(self, request_itr, context):
        for item in request_itr:
            logger.info(item)
        return schema_pb2.pong(server_message="Nice song!")

Server Stream

This is a gRPC server-side streaming function that receives a request with a client message, opens the lyrics file (./lyrics.text in the code), reads it line by line, and sends each line as a response to the client with a delay of 3 seconds between each line. It uses a generator to yield the responses back to the client.

    def streaming_pong(self, request, context):
        logger.info(f"Client said: {request.client_message}")
        with open("./lyrics.text", "r") as fdr:
            for line in fdr:
                response_obj = schema_pb2.pong(server_message=line.strip())
                time.sleep(3)
                yield response_obj

Client & Server Stream (Bi-directional)

In this case, for each request item in the request iterator, the function logs the client message, creates a response object with a "Next line..." server message, waits 3 seconds, and then yields the response object back to the client. The responses are sent back to the client in a continuous stream until the server finishes processing or the client terminates the connection.

    def streaming_ping_pong(self, request_iterator, context):
        for item in request_iterator:
            logger.info(f"Client said: {item}")
            response_obj = schema_pb2.pong(server_message="Next line...")
            time.sleep(3)
            yield response_obj

Starting the gRPC server

Now, we will define the function which will listen to incoming requests, so that the client can use these functions.

def server():
    server = grpc.server(futures.ThreadPoolExecutor(max_workers=3))
    schema_pb2_grpc.add_PingPongServiceServicer_to_server(SchemaService(), server)
    server.add_insecure_port("[::]:8080")
    server.start()

    while True:
        try:
            logger.debug(f"Server Running, threads: {threading.active_count()}")
            time.sleep(5)
        except KeyboardInterrupt:
            server.stop(0)
            logger.critical("Terminating Server")
            break

In this function, we are:

defining the gRPC server using the grpc.server function with a maximum of 3 worker threads

adding the SchemaService class as a servicer to the server

listening on port 8080 and starting the server

indefinitely running the server until we get a Keyboard Interrupt (Ctrl + C)
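A quick note on those 3 worker threads. As far as I understand, the synchronous gRPC server runs each RPC handler on a thread from this pool, so a slow streaming call (like ours, with its 3-second sleeps) keeps one worker busy for as long as it lasts. The sketch below uses only the standard library to show what that cap means; fake_handler is a stand-in I made up for a slow handler, and no gRPC code is involved.

```python
import threading
import time
from concurrent import futures


def measure_peak_concurrency(num_calls: int, max_workers: int,
                             hold_seconds: float = 0.05) -> int:
    """Run num_calls fake handlers and report how many ran at the same time."""
    lock = threading.Lock()
    active = 0
    peak = 0

    def fake_handler(_call_id: int) -> None:
        nonlocal active, peak
        with lock:
            active += 1
            peak = max(peak, active)
        time.sleep(hold_seconds)  # stands in for a slow or long-lived RPC
        with lock:
            active -= 1

    with futures.ThreadPoolExecutor(max_workers=max_workers) as pool:
        # list() forces all calls to finish before we leave the pool.
        list(pool.map(fake_handler, range(num_calls)))
    return peak


peak = measure_peak_concurrency(num_calls=6, max_workers=3)
print(f"peak concurrent handlers: {peak}")
```

The peak never goes above max_workers, no matter how many calls are submitted. For a toy project 3 is plenty, but it is worth keeping in mind once several clients hold long-lived streams open at once.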

Creating the Client 👥

Again, let's start by creating a new Python file: client.py and importing the libraries.

import os

import grpc
from loguru import logger

import schema_pb2
import schema_pb2_grpc

To call service methods, we first need to create a stub.

def run():
    with grpc.insecure_channel("[::]:8080") as channel:
        stub = schema_pb2_grpc.PingPongServiceStub(channel)

Now we create the options that the user can choose from.

        option: int = 1
        while option != 0:
            option = int(
                input(
                    "1 for unary\n2 for client streaming\n3 for server streaming\n4 for bidirec\n0 for exit"
                )
            )
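One small caveat with the menu above: int() raises a ValueError if the user types something that is not a number, which would crash the client. Here is one possible way to guard against that. The helper parse_option and the VALID_OPTIONS set are hypothetical names, not part of the original code.

```python
from typing import Optional

# The menu choices offered by the client loop
VALID_OPTIONS = {0, 1, 2, 3, 4}


def parse_option(raw: str) -> Optional[int]:
    """Return the chosen option, or None if the input is not a valid choice."""
    try:
        option = int(raw.strip())
    except ValueError:
        return None
    return option if option in VALID_OPTIONS else None
```

Inside the loop, you could call parse_option(input(...)) and simply ask again whenever it returns None.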

Now let's define all 4 request types one by one.

Unary

We create the request_payload using the input user provided and send it to the server. We then log the response from the server.

            if option == 1:
                message = input("Enter message: ")
                request_payload = schema_pb2.ping(client_message=message)
                response = stub.unary_ping(request_payload)
                logger.info(f"Server said {response.server_message}")

Client Stream

We open the lyrics text file (./lyrics.text in the code) and yield the lines until the file ends. This is done to simulate a streaming-like operation 😅. After we have finished streaming data to the server, we finally log the response.

def sing():
    with open("./lyrics.text", "r") as fdr:
        for line in fdr:
            request_obj = schema_pb2.ping(client_message=line.strip())
            yield request_obj

            elif option == 2:
                server_response = stub.streaming_ping(sing())
                logger.info(f"Server said: {server_response}")

Server Stream

Similar to the unary request, we send a request to the server. The only difference is that instead of returning a single response, the server returns a stream of pong objects.

            elif option == 3:
                request_payload = schema_pb2.ping(client_message="Sing a song")
                try:
                    for i in stub.streaming_pong(request_payload):
                        print(i)
                except Exception as e:
                    print(e)

Bi-directional Streaming

This example is a combination of client-side and server-side streaming. We reuse our generator from the client stream example to read and yield lines from the text file. Meanwhile, we print each response as it arrives from the server.

            elif option == 4:
                responses = stub.streaming_ping_pong(sing())
                try:
                    for response in responses:
                        print(response)
                except Exception as e:
                    print(e)

Running the Code 👾

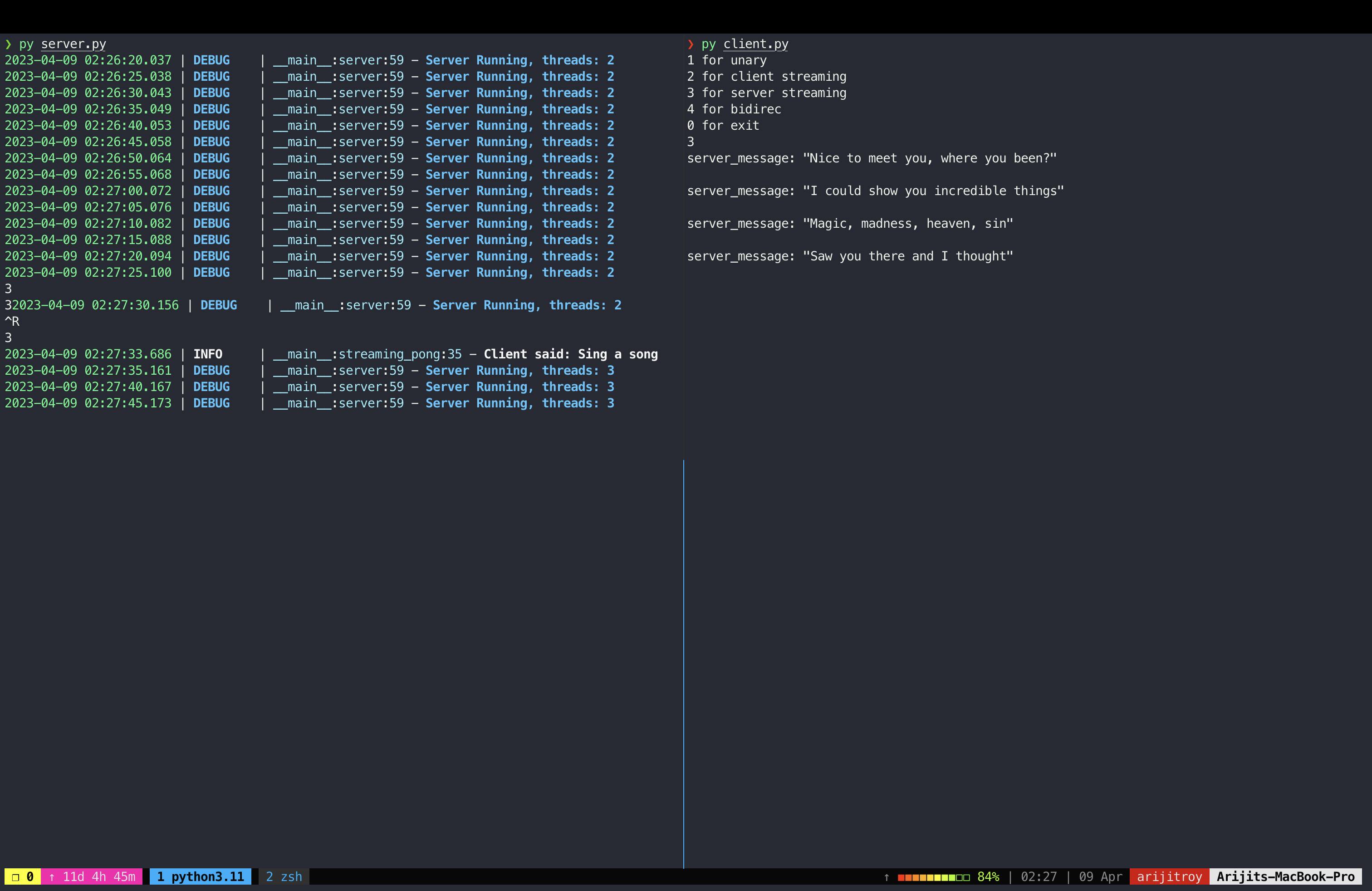

To run the code, open two terminal tabs and run server.py in one and client.py in the other one. If everything works, you will see something like this.

Now, you can choose any option (from 1-4). For example, let's try a server streaming operation.

Since this is a server stream RPC, the server is going to send a stream of messages.

End of Part II

In this blog, I have covered the basics of setting up a gRPC server and client in Python and explored the different types of communication that are possible with gRPC. I hope that this blog has given you a good introduction to gRPC and has inspired you to explore this technology further.

With the increasing popularity of microservices, it is clear that gRPC will continue to play an important role in modern application development ✌🏼

Also, thanks to Esha Baweja for the amazing banner ✨